All code (minus passwords) will be published in the associated GitHub repo.

As mentioned in part 1, my application has not one, but three backends!

- Postgres for relational data

- InfluxDB for performance and time-series data

- Redis to pass data in queues between Celery workers

None of these applications is "stateless", so we can't treat them like cattle and scale them up or down at will.

Stateful Sets

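Kubernetes provides the Stateful Set for workloads like these. Unlike a Deployment, it gives each Pod a stable, predictable name (postgres-0, postgres-1 and so on) and its own PersistentVolumeClaim, which stays bound to that name even if the Pod is rescheduled.

Let's create a Stateful Set for Postgres, defining a few labels and a PersistentVolumeClaim template that requests 5Gi of storage for the database data: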

```yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
  namespace: metamonitor # same namespace as the Service, so its selector can find the Pod
  labels:
    app.kubernetes.io/name: postgres
    app.kubernetes.io/component: postgres
    app.kubernetes.io/part-of: metamonitor
spec:
  selector:
    matchLabels:
      app: metamonitor # has to match .spec.template.metadata.labels
      component: postgres
  serviceName: "postgres"
  replicas: 1 # by default is 1
  minReadySeconds: 10 # by default is 0
  template:
    metadata:
      labels:
        app: metamonitor
        component: postgres
    spec:
      terminationGracePeriodSeconds: 10
      containers:
        - name: postgres
          image: docker.io/postgres:15
          ports:
            - containerPort: 5432
              name: sql
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
  volumeClaimTemplates:
    - metadata:
        name: postgres-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 5Gi
```

We can load this file either via the web UI or with microk8s kubectl apply -f filename.yaml, and we'll see that a Stateful Set is created, which in turn creates a Pod (postgres-0) with a Postgres container in it.

But the container will fail to start, as we haven't passed it any information such as the password or the database name.

The official Postgres image documents three environment variables: POSTGRES_PASSWORD is mandatory, while POSTGRES_USER (default: postgres) and POSTGRES_DB (default: the user name) are optional.

Let's set all three, then.

```yaml
# Only the relevant part of the Stateful Set above
spec:
  template:
    spec:
      containers:
        - name: postgres
          env:
            - name: POSTGRES_USER
              value: "antani"
            - name: POSTGRES_PASSWORD
              value: "antanipass"
            - name: POSTGRES_DB
              value: "antanidb"
```

Save and apply again:

```
LOG: database system is ready to accept connections
```

Hooray!

But now our password is in plain sight in the config file. That’s bad.

Secrets

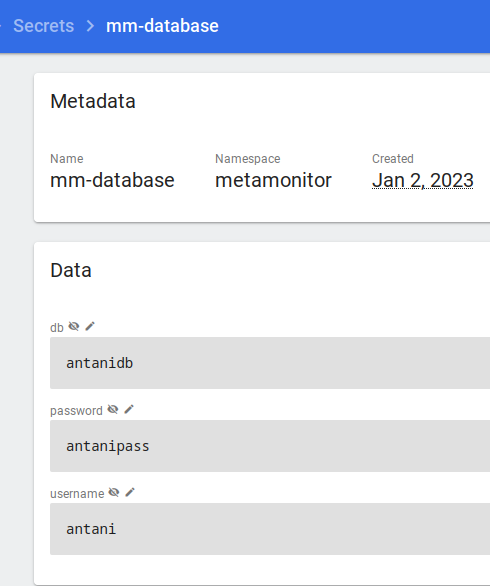

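Kubernetes can store such data in a separate object, a Secret, which can then be referenced from many other places. Beware that by default Secret values are only base64-encoded, not encrypted, in the cluster's datastore, so anyone who can read the Secret can read the password.

For example, we will create a Secret containing Postgres' username, password and database name, which we will reference from both the Postgres and the Django configuration.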

```shell
microk8s kubectl create secret generic mm-database \
  -n metamonitor \
  --from-literal=username=antani \
  --from-literal=password=antanipass \
  --from-literal=db=antanidb
```
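To make the "quite unsafe" remark concrete, this is roughly the object that the command above creates (approximately what kubectl prints if you add --dry-run=client -o yaml to it; the namespace line is omitted here). The values are plain base64 of the literals, so `echo YW50YW5pcGFzcw== | base64 -d` gives the password back.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mm-database
type: Opaque
data:
  db: YW50YW5pZGI=             # base64("antanidb")
  password: YW50YW5pcGFzcw==   # base64("antanipass"), encoded, NOT encrypted
  username: YW50YW5p           # base64("antani")
```

This is also why such a manifest should not be committed to the repo as-is.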

Now we can replace the "env" section in our YAML file to reference this Secret, then delete the Stateful Set and recreate it.
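A sketch of what the replaced "env" section could look like, using the standard secretKeyRef mechanism and the key names from the create secret command above. Each variable is mapped explicitly because the Secret's keys (username, password, db) do not match the POSTGRES_* names the image expects; the Secret also has to live in the same namespace as the Pod.

```yaml
# Only the relevant part of the Stateful Set: env values now come from the mm-database Secret
spec:
  template:
    spec:
      containers:
        - name: postgres
          env:
            - name: POSTGRES_USER
              valueFrom:
                secretKeyRef:
                  name: mm-database
                  key: username
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mm-database
                  key: password
            - name: POSTGRES_DB
              valueFrom:
                secretKeyRef:
                  name: mm-database
                  key: db
```

Keep in mind that the Postgres image only reads these variables when it initializes an empty data directory; since deleting the Stateful Set leaves its PersistentVolumeClaim in place, an already-initialized database keeps its original credentials.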

Reachability

Postgres is running, but nothing is reachable under the name "postgres" yet. There's a "postgres" Stateful Set, but all it generates is a "postgres-0" Pod.

So we need to set up a Service that forwards port 5432 to all Pods carrying the labels we set above, "app: metamonitor" and "component: postgres":

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: postgres
  namespace: metamonitor
  labels:
    app.kubernetes.io/name: postgres
    app.kubernetes.io/component: postgres
    app.kubernetes.io/part-of: metamonitor
spec:
  type: ClusterIP
  selector:
    app: metamonitor
    component: postgres
  ports:
    - port: 5432
      targetPort: sql
```
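With the Service in place, other Pods in the metamonitor namespace can reach the database simply as postgres:5432 (from another namespace, postgres.metamonitor, or postgres.metamonitor.svc.cluster.local on a cluster using the default domain). A sketch of how a Django container might be wired up; the container name, image and DB_* variable names are hypothetical and only work if the Django settings read them.

```yaml
# Hypothetical fragment of a Django Deployment's pod template
spec:
  template:
    spec:
      containers:
        - name: django                          # hypothetical
          image: example/metamonitor-django:1.0 # hypothetical image
          env:
            - name: DB_HOST                     # hypothetical variable names
              value: "postgres"                 # the Service name, resolved by cluster DNS
            - name: DB_PORT
              value: "5432"
            - name: DB_NAME
              valueFrom:
                secretKeyRef:
                  name: mm-database
                  key: db
            - name: DB_USER
              valueFrom:
                secretKeyRef:
                  name: mm-database
                  key: username
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mm-database
                  key: password
```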

Now "just" do the same for Redis, then set up readiness and liveness probes ("alive" checks) to make sure we're not routing traffic to dead pods, and then…
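For the Postgres container, such checks could look roughly like this. The readiness probe runs pg_isready (shipped in the official image) through a shell so the container's own POSTGRES_* variables are expanded, and a failing readiness probe removes the Pod from the Service's endpoints; the liveness probe only checks that the named port accepts TCP connections and restarts the container if it stops doing so. The timings are arbitrary starting values, not tuned recommendations.

```yaml
# Only the relevant part of the Stateful Set: probes for the postgres container
spec:
  template:
    spec:
      containers:
        - name: postgres
          readinessProbe:
            exec:
              command:
                - sh
                - -c
                - pg_isready -U "$POSTGRES_USER" -d "$POSTGRES_DB"
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:
            tcpSocket:
              port: sql            # the named containerPort (5432)
            initialDelaySeconds: 30
            periodSeconds: 20
```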

Helm Charts

Or maybe not.

That was quite long and boring, wasn’t it?

It feels like installing every single piece of software by hand and writing init scripts (or systemd services, for you modern penguin hatchlings).

We could all use a package manager. Well, there’s one.

Helm charts are just that: curated (some more, some less) packages of Kubernetes manifests with advanced configuration options. For example, Bitnami's PostgreSQL chart can even set up replication on the fly.

All you gotta do is literally:

```shell
microk8s helm repo add bitnami https://charts.bitnami.com/bitnami
microk8s helm install -n metamonitor postgres bitnami/postgresql
```

This will take care of setting everything up, generating random passwords, saving them in Secrets…

If you want to pass values to the install, either do so with --set flags or, in a more revision-control-friendly way, download the chart's "values.yaml" (microk8s helm show values bitnami/postgresql > values.yaml), edit it and pass it with "-f values.yaml".
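As a sketch, a trimmed values.yaml for the PostgreSQL chart might look like this, passed with microk8s helm install -n metamonitor postgres bitnami/postgresql -f values.yaml. The key names are those used by recent versions of the chart; check them against the file produced by helm show values, since chart versions differ. The user and database names simply reuse the ones from above.

```yaml
auth:
  username: antani          # non-superuser account to create
  database: antanidb        # database to create for it
  # no passwords here: the chart generates random ones and stores them in a Secret
architecture: replication   # default is "standalone"
readReplicas:
  replicaCount: 1           # one streaming replica next to the primary
primary:
  persistence:
    size: 5Gi               # size of the primary's PersistentVolumeClaim
```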

Now do the same for Redis and InfluxDB.
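For Redis, a minimal values file (hypothetical name values-redis.yaml) for Bitnami's redis chart could be passed with microk8s helm install -n metamonitor redis bitnami/redis -f values-redis.yaml. The keys below follow recent versions of that chart and should be checked against its own values.yaml; a single instance is assumed to be enough for a Celery queue.

```yaml
architecture: standalone    # one Redis master, no replicas (chart default is "replication")
auth:
  enabled: true             # a random password is generated and stored in a Secret if none is set
master:
  persistence:
    size: 2Gi               # arbitrary example size for queued data
```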

Much easier, right?